by Jonathan Giles | Jul 7, 2010 | Language Lesson, Performance

Sure, there are plenty of tips discussing how to use sequences in JavaFX, but the one I want to cover quickly today is that sequences are immutable. That is, once you create them, their content can’t change.

‘You lie!’ I hear you all shout, running off to your IDEs to show me the following fully legal and compiler-friendly code:

[jfx]

var seq = [ 1, 2, 3 ];

insert 4 into seq;

insert 5 into seq;

delete 1 from seq;

println(seq);

[/jfx]

If you run this code, the output will be [ 2, 3, 4, 5 ], as expected. It’s understandable if you think the sequence is being updated in-place – it certainly looks that way from where we’re all standing – except that under the hood it isn’t, and there are performance implications we need to be clear on. To get straight to the point, the insert and delete instructions create a brand new sequence instance, but fortunately they also ensure that the seq variable always refers to the new instance, quietly leaving the old instance to get garbage collected.

Of course, as I just noted, from a developer’s perspective we can treat seq as a reference to a sequence instance and be oblivious to these ‘behind-the-scenes’ sequence shenanigans. There is nothing wrong with this, but it’s important to know that creating sequences isn’t free, and minimising the number of sequences we create is certainly a smart idea.
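To make the ‘new instance on every change’ idea concrete, here is a minimal sketch in plain Java rather than JavaFX Script. It is only a model of the behaviour described above, not how the JavaFX runtime is actually implemented: the class `ImmutableSeqSketch` and its `insert` and `delete` helpers are hypothetical names invented for this illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical model of an immutable sequence; NOT the JavaFX runtime's own classes.
final class ImmutableSeqSketch {

    // Models "insert value into seq": copy the old contents into a brand new list.
    static List<Integer> insert(List<Integer> seq, int value) {
        List<Integer> copy = new ArrayList<>(seq);
        copy.add(value);
        return Collections.unmodifiableList(copy);
    }

    // Models "delete value from seq": build a new list without the matching elements.
    static List<Integer> delete(List<Integer> seq, int value) {
        List<Integer> copy = new ArrayList<>();
        for (int element : seq) {
            if (element != value) {
                copy.add(element);
            }
        }
        return Collections.unmodifiableList(copy);
    }

    public static void main(String[] args) {
        List<Integer> seq = List.of(1, 2, 3);
        List<Integer> original = seq;  // keep a handle on the first instance

        // Each call returns a new instance, and the variable is re-pointed at it.
        seq = insert(seq, 4);
        seq = insert(seq, 5);
        seq = delete(seq, 1);

        System.out.println(seq);       // prints [2, 3, 4, 5]
        System.out.println(original);  // prints [1, 2, 3], the old instance is untouched
    }
}
```

The variable always ends up pointing at the newest list, which is why the code looks like an in-place update, while every intermediate list becomes garbage.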

To be slightly more technical, I should note that there are some exceptions to this ‘sequences are immutable’ rule when dealing with non-shared sequences of specific types, but I don’t want to muddy the point of this blog, which is….

Use the expression language features of JavaFX to reduce the number of sequence instances you need to create.

For example, you might have code that looks like the following:

[jfx]

var seq:Integer[];

for (i in [1..MAX]) {

insert i*i into seq;

}

[/jfx]

I hope, with this blog post firmly at the forefront of your mind, you can now see why perhaps this isn’t such a great idea: we’re creating MAX sequence instances, one for each iteration of the for loop – ouch. Importantly, this example isn’t far-fetched and made to prove a point: I just found a very similar example in my code the other day (which I promptly fixed up), and even found a few blog posts on this site and other sites that use this approach. What you actually should do is something like the following:

[jfx]

var seq:Integer[];

seq = for (i in [1..MAX]) {

i*i

}

[/jfx]

In this case rather than create MAX instances of the sequence, we’re creating only one – the result of the for expression. This is considerably more efficient, and can lead to huge performance gains, depending on what you’re doing. In my case it made the difference between my code being usable and being jittery. Obviously in my case this was a very critical for loop, and it may be similar in your use cases also.
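For a rough feel of why this matters, here is a small back-of-the-envelope sketch in plain Java. It assumes the simplest possible model, where every insert copies the whole existing sequence into a new instance; as noted earlier there are exceptions to that rule, so treat this as a worst case rather than a measurement. The class and method names are made up for the illustration.

```java
// Hypothetical cost model: counts element writes, it does not measure real JavaFX behaviour.
final class SequenceBuildCost {

    // Inserting inside the loop: the insert that grows the sequence from
    // `size` to `size + 1` elements copies `size` old elements and writes one new one.
    static long copiesWithRepeatedInsert(int max) {
        long copies = 0;
        for (int size = 0; size < max; size++) {
            copies += size + 1;
        }
        return copies;
    }

    // Using the for expression: one sequence is produced, each element written once.
    static long copiesWithForExpression(int max) {
        return max;
    }

    public static void main(String[] args) {
        int max = 1000;
        System.out.println(copiesWithRepeatedInsert(max));  // prints 500500
        System.out.println(copiesWithForExpression(max));   // prints 1000
    }
}
```

Under that assumption the work grows quadratically with MAX for the insert-in-a-loop version and only linearly for the for expression.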

I hope that this helps you eke out a little more performance from your apps. Now, go forth and optimise your sequences 🙂

by Jonathan Giles | Jun 26, 2010 | Controls, Performance, UI Design

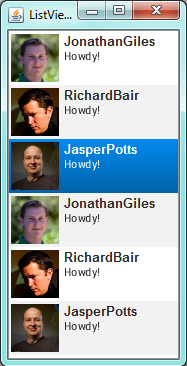

Ok, I know we’ve been going on about custom cells / cell factories a bit recently, but I wanted to do one more post about a very useful topic: caching within cell content.

These days ‘Hello World’ has been replaced by building a Twitter client, so I’ve decided to frame this topic in terms of building a Twitter client. Because I don’t actually care about the whole web service side of things, I’ve neglected to implement the ‘real data’ / web services aspect of it. If you want to see an actual running implementation with real data, have a look at William Antônio’s Twitter client, which is using this ListCell implementation.


by Richard Bair | Sep 25, 2009 | API Design, Controls, Performance

When you have a lot of data to display in a Control such as a ListView, you need some way of virtualizing the Nodes created and used. For example, if you have 10 million data items, you don’t want to create 10 million Nodes. Instead, you dynamically create only enough Nodes to fill the display. Because of our heritage in Swing, we know how critical this is for real apps. I got an optimization issue reported this morning on “UI Virtualization”.
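As a rough illustration of the idea, the sketch below works out how many cells a virtualized list needs in plain Java. It assumes every row has the same fixed height, which real controls do not require, and all of the names (`VirtualFlowSketch`, `cellsNeeded`, `firstVisibleIndex`) are hypothetical rather than part of any JavaFX API.

```java
// Hypothetical helper for a fixed-row-height list; not a JavaFX class.
final class VirtualFlowSketch {

    // Cells needed to cover the viewport, plus one spare for a partially visible row,
    // but never more cells than there are items.
    static int cellsNeeded(double viewportHeight, double cellHeight, int itemCount) {
        int fit = (int) Math.ceil(viewportHeight / cellHeight) + 1;
        return Math.min(fit, itemCount);
    }

    // Index of the first item that is at least partly visible at this scroll offset.
    static int firstVisibleIndex(double scrollOffset, double cellHeight) {
        return (int) Math.floor(scrollOffset / cellHeight);
    }

    public static void main(String[] args) {
        // A 400px viewport with 25px rows over 10 million items.
        System.out.println(cellsNeeded(400, 25, 10_000_000));  // prints 17
        // After scrolling down 1000px, the first visible item is number 40.
        System.out.println(firstVisibleIndex(1000, 25));       // prints 40
    }
}
```

With these numbers, 17 Nodes stand in for 10 million items; scrolling just changes which items those Nodes are showing.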

by Richard Bair | Sep 15, 2009 | Performance

We’re in the middle of a huge performance push on JavaFX, attacking the problem from many different angles. The compiler guys (aka: Really Smart Dudes) are fixing a lot of the long-standing problems with binding. The graphics guys (aka: Really Hip Smart Dudes) are engaged in writing our lightweight hardware accelerated backend story. I’m working with Kevin Rushforth and Chien Yang on attacking the performance issues in the JavaFX scenegraph (which in large measure are impacted by the compiler work, so we’re working closely with them).

Today I was dredging up an old issue we’d looked at earlier this year regarding insertion times, and specifically, some really radically bad insertion times into Groups. It turns out to be timely as Simon Brocklehurst was encountering this very issue. This post will go into a bit more depth as to what is currently going on, what we’re doing about it, and some other cool / interesting tidbits.

So first, some code:

[jfx]

import javafx.scene.Group;

import javafx.scene.Node;

import javafx.scene.shape.Rectangle;

// Setup: create all the nodes up front so the tests below time insertion only.

java.lang.System.out.print("Creating 100,000 nodes...");

var startTime = java.lang.System.currentTimeMillis();

var nodes = for (i in [0..<100000]) Rectangle { };

var endTime = java.lang.System.currentTimeMillis();

println("took {endTime - startTime}ms");

// Test 1: insert the nodes, one at a time, into a plain sequence.

java.lang.System.out.print("Adding 100,000 nodes to sequence one at a time...");

startTime = java.lang.System.currentTimeMillis();

var seq:Node[];

for (n in nodes) {

insert n into seq;

}

endTime = java.lang.System.currentTimeMillis();

println("took {endTime - startTime}ms");

// Test 2: insert the nodes, one at a time, into a Group that is not in a scene.

java.lang.System.out.print("Adding 100,000 nodes to group one at a time...");

startTime = java.lang.System.currentTimeMillis();

var group = Group { }

for (n in nodes) {

insert n into group.content;

}

endTime = java.lang.System.currentTimeMillis();

println("took {endTime - startTime}ms");

// Release the nodes from the first group before they are added to another one.

group.content = [];

// Test 3: assign all the nodes to a Group in one go.

java.lang.System.out.print("Adding 100,000 nodes to group all at once...");

startTime = java.lang.System.currentTimeMillis();

var group2 = Group { }

group2.content = nodes;

endTime = java.lang.System.currentTimeMillis();

println("took {endTime - startTime}ms");

[/jfx]

In this test we go ahead and create 100,000 nodes (if you run this at home be sure to bump up memory to accommodate — the compiler work going on will make it so this fits in memory but for now we have to increase the heap). We then have 3 tests. The first one adds the nodes, one at a time, to a plain sequence. The second adds the nodes, one at a time, to a Group (and to try to keep things fair, the Group isn’t in a scene). And the third test adds all the nodes to the Group in one go.

Here are the numbers I recorded:

Creating 100,000 nodes...took 13321ms

Adding 100,000 nodes to sequence one at a time...took 39ms

Adding 100,000 nodes to group one at a time...took 1203783ms

Adding 100,000 nodes to group all at once...took 213ms

Ouch! 1,203 seconds (or about 20 minutes) to insert nodes one at a time into the group’s content, whereas it took only 39ms to fill up a plain old sequence. The second Ouch! is that it took 13s to create this many nodes. By comparison, creating the node “peers” (which is an implementation detail, but basically the rendering pipeline representation of the node) only took a half second.

So first, there is clearly some work to do on startup and I’m confident we’ll get that sorted, it isn’t rocket science. Just gotta reduce redundant work. Check.

So how about that second part? Well, for reference, I wrote the same test in pure Java talking to the Swing-based node peers directly. The numbers for that:

Creating 100,000 nodes...took 495ms

Adding 100,000 nodes to sequence one at a time...took 10ms

Adding 100,000 nodes to group one at a time...took 47ms

Adding 100,000 nodes to group all at once...took 122ms

Ya, so obviously there is a big difference between 47ms and 20 minutes. 47ms represents something we know we can get close to; after all, we have already done so in the Swing-based peer. There are, however, two big things that are different between the FX Group code and the peer Group code: the FX Group has checks for circularity and for duplication, whereas the peer does not (since it knows the FX side has already handled the problem).
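For readers who haven’t met a circularity check before, here is a minimal sketch of the general idea in plain Java. It is an assumption about what such a check looks like in principle, not the actual FX Group code: `SimpleNode` and `wouldCreateCycle` are hypothetical names, and the real scenegraph classes carry far more state.

```java
// Hypothetical, stripped-down scenegraph node; NOT the real javafx.scene.Node.
final class CircularityCheckSketch {

    static final class SimpleNode {
        SimpleNode parent;  // null for a root
    }

    // Adding `child` under `parent` creates a cycle when `child` is `parent` itself
    // or one of its ancestors, so walk up the parent chain looking for it.
    static boolean wouldCreateCycle(SimpleNode parent, SimpleNode child) {
        for (SimpleNode n = parent; n != null; n = n.parent) {
            if (n == child) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        SimpleNode root = new SimpleNode();
        SimpleNode middle = new SimpleNode();
        SimpleNode leaf = new SimpleNode();
        middle.parent = root;
        leaf.parent = middle;

        System.out.println(wouldCreateCycle(leaf, root));              // prints true
        System.out.println(wouldCreateCycle(root, new SimpleNode()));  // prints false
    }
}
```

A walk like this costs time proportional to the depth of the tree on every insert, which is cheap on its own but adds up when it runs 100,000 times alongside other per-insert work.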

Commenting out the circularity check and the duplication check gets us from 20 minutes down to about 21 seconds. That is still hundreds of times too long, but a heck of a lot better. There are various other things going on in the FX Group code that we could single out too, and in the end get really close to 40ms.

So, what does this mean? One option is to throw all semblance of safety out the window, giving developers / designers all kinds of rope and letting them hang themselves. Which probably isn’t a good way to treat your users. Another option is to optimize the checks as best we can. While that is probably going to give some win, it won’t give the big win.

Probably the best answer (and I have yet to prove it) is to simply defer the work, sort of batching it up behind the scenes. Basically, suppose you insert 100 nodes into a group, one at a time, but never ask for the group content. What if we were to defer the circularity checks and so forth (actually, defer nearly all the work) until the group’s content was read? This would still give us the nice checks along with the performance of a batched-up insert, at the cost of deferring error reporting (potentially bothersome) until the value is read. And that would be closer to 213ms than 20 minutes.
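The deferral idea can be sketched in a few lines of plain Java. This is only an illustration of the general pattern (mark the content dirty on insert, validate lazily on read), not the approach that was or will be implemented in the FX Group: `DeferredGroupSketch`, its `dirty` flag, and the `validationRuns` counter are all hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Set;

// Hypothetical group that postpones its safety checks until the content is read.
final class DeferredGroupSketch {
    private final List<Object> content = new ArrayList<>();
    private boolean dirty = false;
    int validationRuns = 0;  // exposed only so the sketch can show how often checks run

    // Inserts do no checking at all; they just record that checks are now owed.
    void insert(Object node) {
        content.add(node);
        dirty = true;
    }

    // Reading the content pays for all the deferred checks in a single pass.
    List<Object> getContent() {
        if (dirty) {
            validate();
            dirty = false;
        }
        return new ArrayList<>(content);
    }

    // Stand-in for the real checks: here only a duplicate check, done by identity.
    private void validate() {
        validationRuns++;
        Set<Object> seen = Collections.newSetFromMap(new IdentityHashMap<>());
        for (Object node : content) {
            if (!seen.add(node)) {
                throw new IllegalStateException("duplicate node in group content");
            }
        }
    }

    public static void main(String[] args) {
        DeferredGroupSketch group = new DeferredGroupSketch();
        for (int i = 0; i < 100; i++) {
            group.insert(new Object());
        }
        System.out.println(group.validationRuns);      // prints 0, nothing checked yet
        System.out.println(group.getContent().size()); // prints 100
        System.out.println(group.validationRuns);      // prints 1, one batched check
    }
}
```

The trade-off is visible in the sketch: a bad insert is only reported by the later `getContent()` call, far away from the line that caused it.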

That’s the idea anyway, I’ll see if I can make it work. Even so I bet we could take that 213 and cut it in half (remember 40ms of it is being eaten by the backend peer, so if we got it down to 80ms we’d be smokin’).

Update

I’ve been doing more work on this and have a prototype that indeed gets the insert times for 100,000 nodes down from 23 minutes to 200ms. I do it without batching up the changes, but by simply making the duplicate checks more efficient. There is also a bit of trickery related to convincing the compiler not to create a duplicate of the oldNodes sequence, which is a topic for another day.
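The post doesn’t say how the duplicate checks were made more efficient, so the following is purely an illustration of one common way to do it, in plain Java: replace a linear scan of the existing content with a lookup in an identity-based set. The class and method names are hypothetical, and the counters exist only to show the difference in work.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Set;

// Hypothetical comparison of two duplicate-check strategies; not the prototype's code.
final class DuplicateCheckSketch {

    // Scan the existing content before every insert; returns the comparisons made.
    static long insertAllWithScan(List<Object> nodes) {
        List<Object> content = new ArrayList<>();
        long comparisons = 0;
        for (Object node : nodes) {
            boolean duplicate = false;
            for (Object existing : content) {
                comparisons++;
                if (existing == node) {
                    duplicate = true;
                    break;
                }
            }
            if (!duplicate) {
                content.add(node);
            }
        }
        return comparisons;
    }

    // One identity-set lookup per insert; returns the number of lookups made.
    static long insertAllWithSet(List<Object> nodes) {
        List<Object> content = new ArrayList<>();
        Set<Object> seen = Collections.newSetFromMap(new IdentityHashMap<>());
        long lookups = 0;
        for (Object node : nodes) {
            lookups++;
            if (seen.add(node)) {
                content.add(node);
            }
        }
        return lookups;
    }

    public static void main(String[] args) {
        List<Object> nodes = new ArrayList<>();
        for (int i = 0; i < 1000; i++) {
            nodes.add(new Object());
        }
        System.out.println(insertAllWithScan(nodes));  // prints 499500
        System.out.println(insertAllWithSet(nodes));   // prints 1000
    }
}
```

For 1,000 distinct nodes the scan does 499,500 comparisons against 1,000 set lookups, and the gap widens quadratically as the node count grows.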